How is Data Normalization Implemented in Corner Bowl Server Manager?

According to Wikipedia, “Database normalization is the process of structuring a relational database in accordance with a series of so-called normal forms in order to reduce data redundancy and improve data integrity. It was first proposed by British computer scientist Edgar F. Codd as part of his relational model.”

Normal forms

Codd introduced First Normal Form (1NF) in 1970 and went on to define Second Normal Form (2NF) and Third Normal Form (3NF) in 1971.

First Normal Form (1NF)

All operating system and application log entries are unique; therefore, each inserted row satisfies First Normal Form (1NF).

Second Normal Form (2NF)

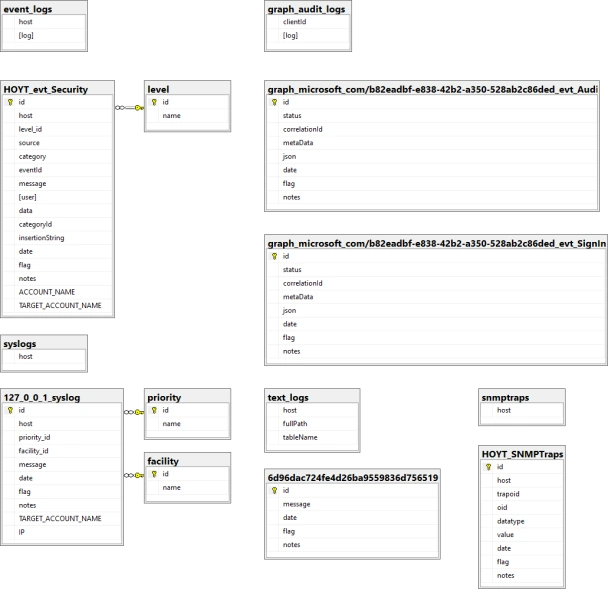

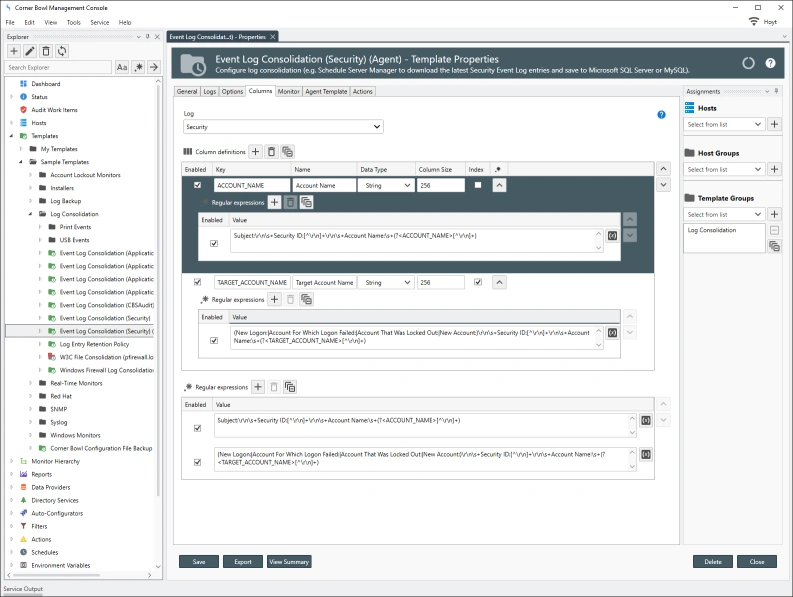

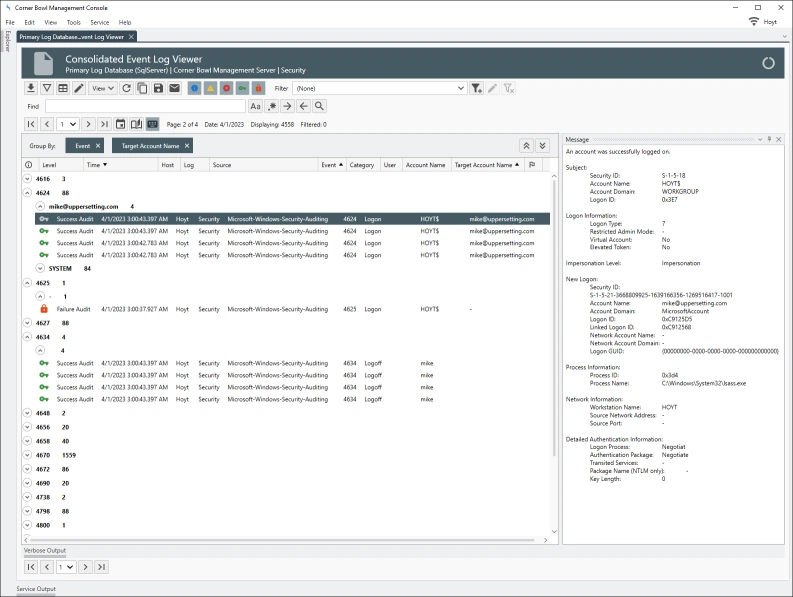

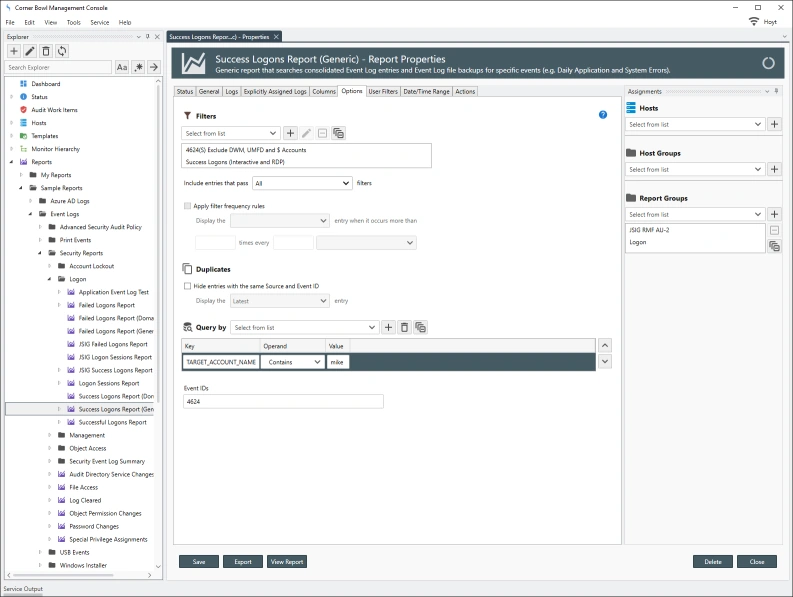

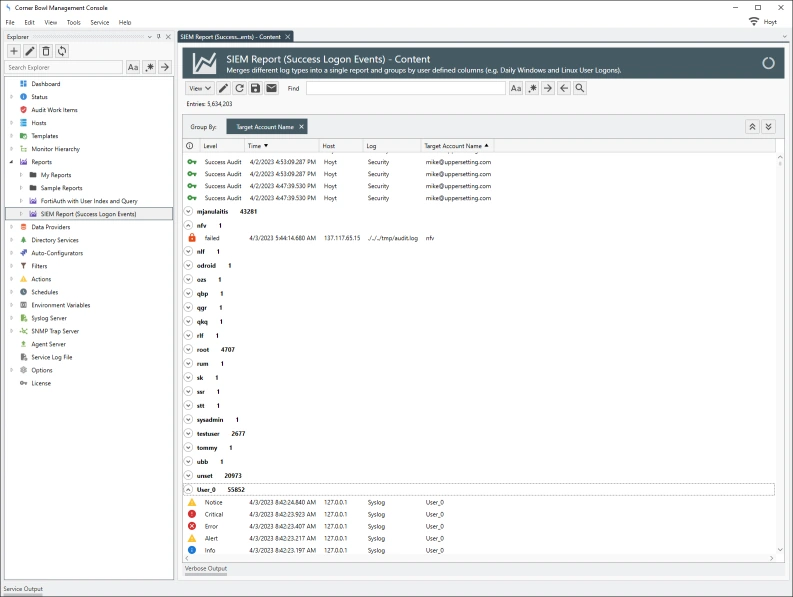

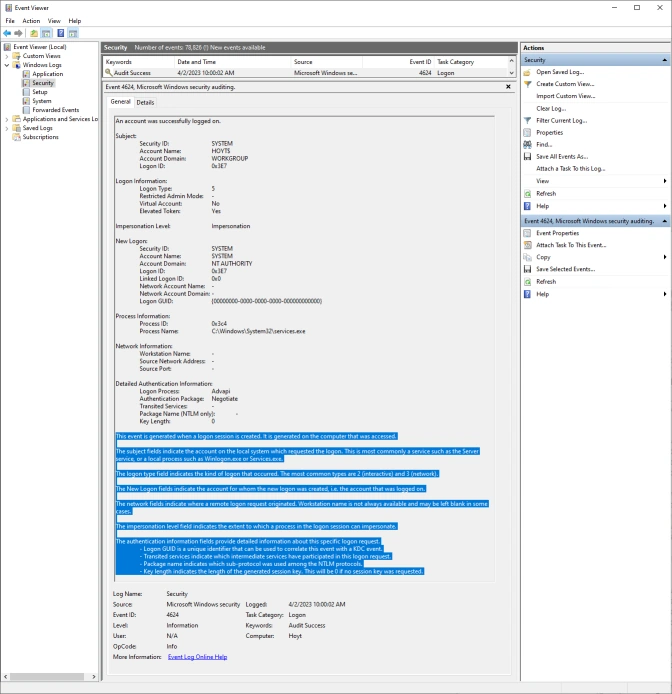

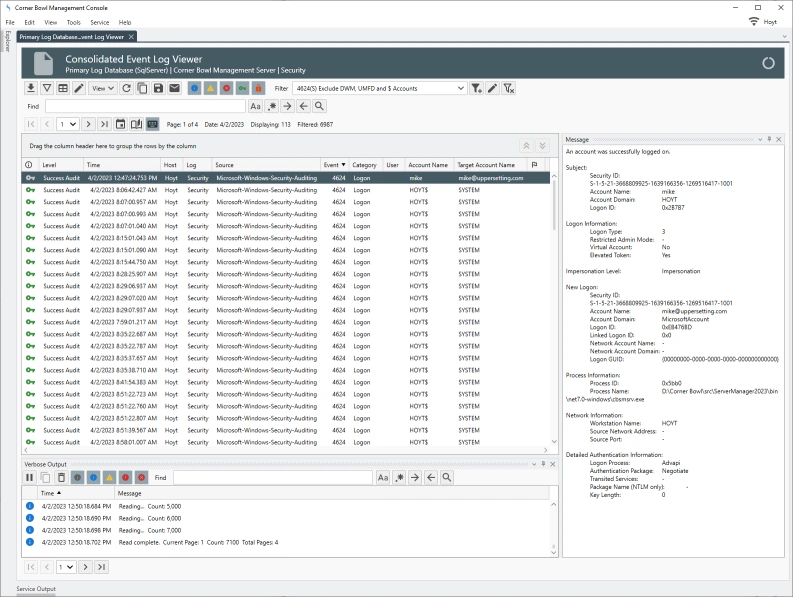

Since each row is unique, 2NF does not apply to log entries. However, some entries, such as Microsoft's Security Event Log entries, embed duplicate information that does not need to be saved. In the examples above, several large informational paragraphs are appended to the end of the unique message. Corner Bowl Server Manager parses specific Security Event Log entry messages and removes these duplicated informational paragraphs from each message before saving the entry to the central log database. This process is also known as data cleansing.
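A minimal Python sketch of this kind of cleansing is shown below. It assumes that paragraphs in a message are separated by blank lines and that the repeated paragraphs for each event ID are already known. The `KNOWN_BOILERPLATE` catalog, its placeholder strings and the `cleanse_message` function are hypothetical; they are not Corner Bowl Server Manager's actual parsing rules, and event ID 4624 is used only as an example key.

```python
import re

# Hypothetical catalog: event ID -> paragraphs assumed to repeat verbatim in
# every message with that ID. The strings are placeholders; real text would be
# taken from your own Security Event Log entries.
KNOWN_BOILERPLATE: dict[int, set[str]] = {
    4624: {
        "Boilerplate explanation paragraph A.",
        "Boilerplate explanation paragraph B.",
    },
}


def cleanse_message(event_id: int, message: str) -> str:
    """Return the message with known boilerplate paragraphs removed."""
    boilerplate = KNOWN_BOILERPLATE.get(event_id)
    if not boilerplate:
        return message  # event IDs without rules are stored unchanged
    # Split on blank lines (LF or CRLF) to get individual paragraphs.
    paragraphs = re.split(r"\r?\n\s*\r?\n", message.strip())
    kept = [p for p in paragraphs if p.strip() not in boilerplate]
    return "\n\n".join(kept)


msg = (
    "Unique message text.\n\n"
    "Boilerplate explanation paragraph A.\n\n"
    "Boilerplate explanation paragraph B."
)
print(cleanse_message(4624, msg))  # prints "Unique message text."
```

Matching whole paragraphs against a per-event catalog keeps the unique part of the message intact and leaves messages from unrecognized event IDs untouched.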

Third Normal Form (3NF)

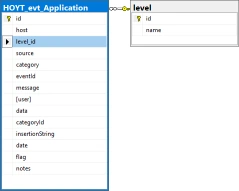

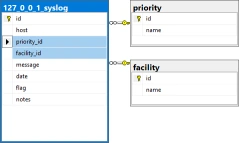

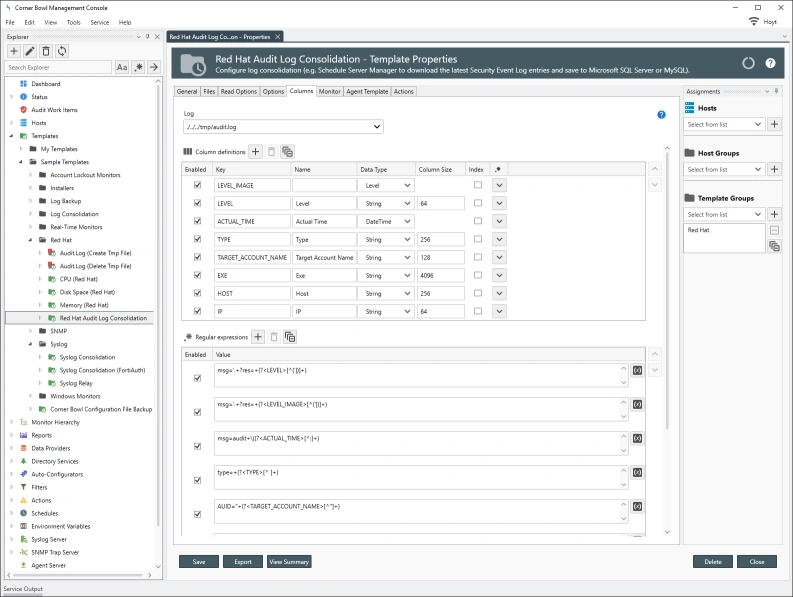

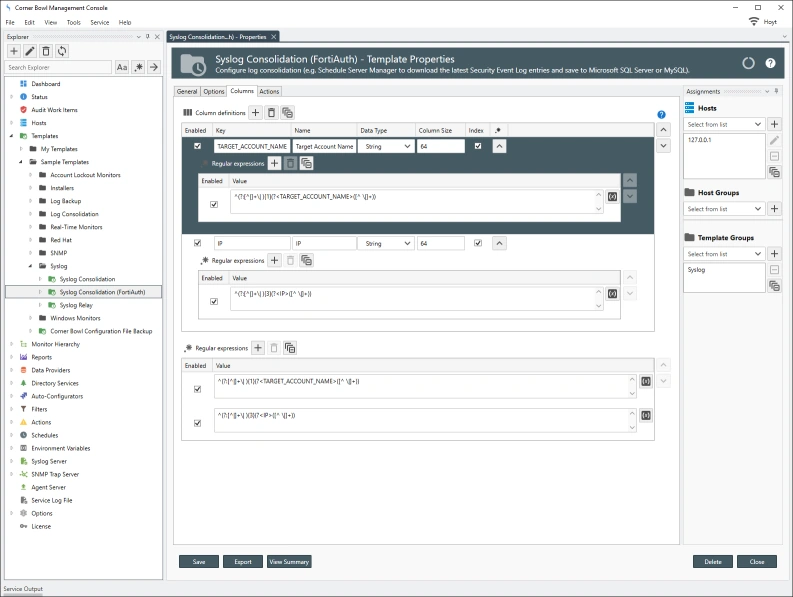

Event levels (Informational, Warning and Error) and Syslog priorities (debug, info, notice, warn, critical, error, emergency and alert) are stored in their own tables. Each saved log entry is then assigned foreign key values that reference those tables, significantly reducing the duplicated data stored with every log entry.
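The lookup-table pattern can be sketched with Python's built-in `sqlite3` module. The table and column names (`event_level`, `syslog_priority`, `log_entry`) and the helpers `open_db`, `save_entry` and `read_entries` are hypothetical and do not describe the product's actual schema. Each level or priority name is stored once, and each log entry carries only a small integer key.

```python
import sqlite3

# Hypothetical schema for illustration only.
SCHEMA = """
CREATE TABLE event_level (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
CREATE TABLE syslog_priority (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
CREATE TABLE log_entry (
    id                 INTEGER PRIMARY KEY,
    message            TEXT NOT NULL,
    event_level_id     INTEGER REFERENCES event_level(id),
    syslog_priority_id INTEGER REFERENCES syslog_priority(id)
);
"""

EVENT_LEVELS = ["Informational", "Warning", "Error"]
SYSLOG_PRIORITIES = ["debug", "info", "notice", "warn",
                     "critical", "error", "emergency", "alert"]


def open_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
    conn.executescript(SCHEMA)
    # Each name is stored exactly once in its lookup table.
    conn.executemany("INSERT INTO event_level(name) VALUES (?)",
                     [(n,) for n in EVENT_LEVELS])
    conn.executemany("INSERT INTO syslog_priority(name) VALUES (?)",
                     [(n,) for n in SYSLOG_PRIORITIES])
    return conn


def lookup_id(conn: sqlite3.Connection, table: str, name: str) -> int:
    # `table` is always one of our own fixed table names, never user input.
    row = conn.execute(f"SELECT id FROM {table} WHERE name = ?", (name,)).fetchone()
    if row is None:
        raise ValueError(f"unknown {table} name: {name!r}")
    return row[0]


def save_entry(conn, message, level=None, priority=None):
    # Store integer foreign keys instead of repeating the level/priority text.
    level_id = lookup_id(conn, "event_level", level) if level else None
    priority_id = lookup_id(conn, "syslog_priority", priority) if priority else None
    conn.execute(
        "INSERT INTO log_entry(message, event_level_id, syslog_priority_id) "
        "VALUES (?, ?, ?)",
        (message, level_id, priority_id),
    )


def read_entries(conn):
    # Join the lookup tables back in to recover the human-readable names.
    return conn.execute(
        """SELECT e.message, l.name, p.name
           FROM log_entry e
           LEFT JOIN event_level l ON l.id = e.event_level_id
           LEFT JOIN syslog_priority p ON p.id = e.syslog_priority_id
           ORDER BY e.id"""
    ).fetchall()


conn = open_db()
save_entry(conn, "Service started", level="Informational")
save_entry(conn, "Disk almost full", priority="warn")
print(read_entries(conn))
# prints [('Service started', 'Informational', None), ('Disk almost full', None, 'warn')]
```

Each row stores an integer such as `1` rather than repeating a string such as "Informational", and the names are recovered with a join when entries are read back.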